How our workflow works:

Multi-image photogrammetry:

One integral part of our digitisation effort is the so-called multi-image photogrammetry, otherwise known as structure-from-motion.

This describes the process of scanning a real-life physical object or structure using image and video data. To achieve an optimal result, it is imperative to take pictures with a certain amount of overlap, while also making sure that both the object to be scanned and any surrounding reference points are lit as evenly as possible. Furthermore, shiny, reflective and transparent surfaces should usually be avoided or lit in a way that reduces reflections, since they can lead to calculation errors in the next step.

The images are then post-processed to enhance the results and reduce possible calculation errors. Afterwards, they are imported into state-of-the-art photogrammetry software for processing. The software uses a multitude of reference points shared between the different images to estimate the camera positions. From there, it calculates a dense cloud of reference points in space. In the next step, these reconstructed points are used to build a solid 3D mesh model. A model created in that manner is also known as a poly-mesh.

The image below shows, in broad strokes, how a typical photogrammetry workflow works.

Of course, while this image is able to provide a rudimentary understanding of what multi-image photogrammetry is and how it generally works, it does not show in detail how one can get to a finished model starting from nothing more than a number of images.

We have thus decided to take a look at a detailed, step-by-step example, using some of the models and material previously presented on this blog, to show how we achieve the results you have seen so far. We will talk about which challenges may arise during the workflow, what is possible and what is not, and about the potential and opportunities to extend our workflow in the future.

In doing so, we hope to raise awareness of how data and cultural heritage should be treated and documented during research, as there might come a time when such documentation allows for the generation of additional data, even if the original object is long gone or has been damaged in the meantime.

Example 1 – Stele:

Step 1 – Photographic documentation:

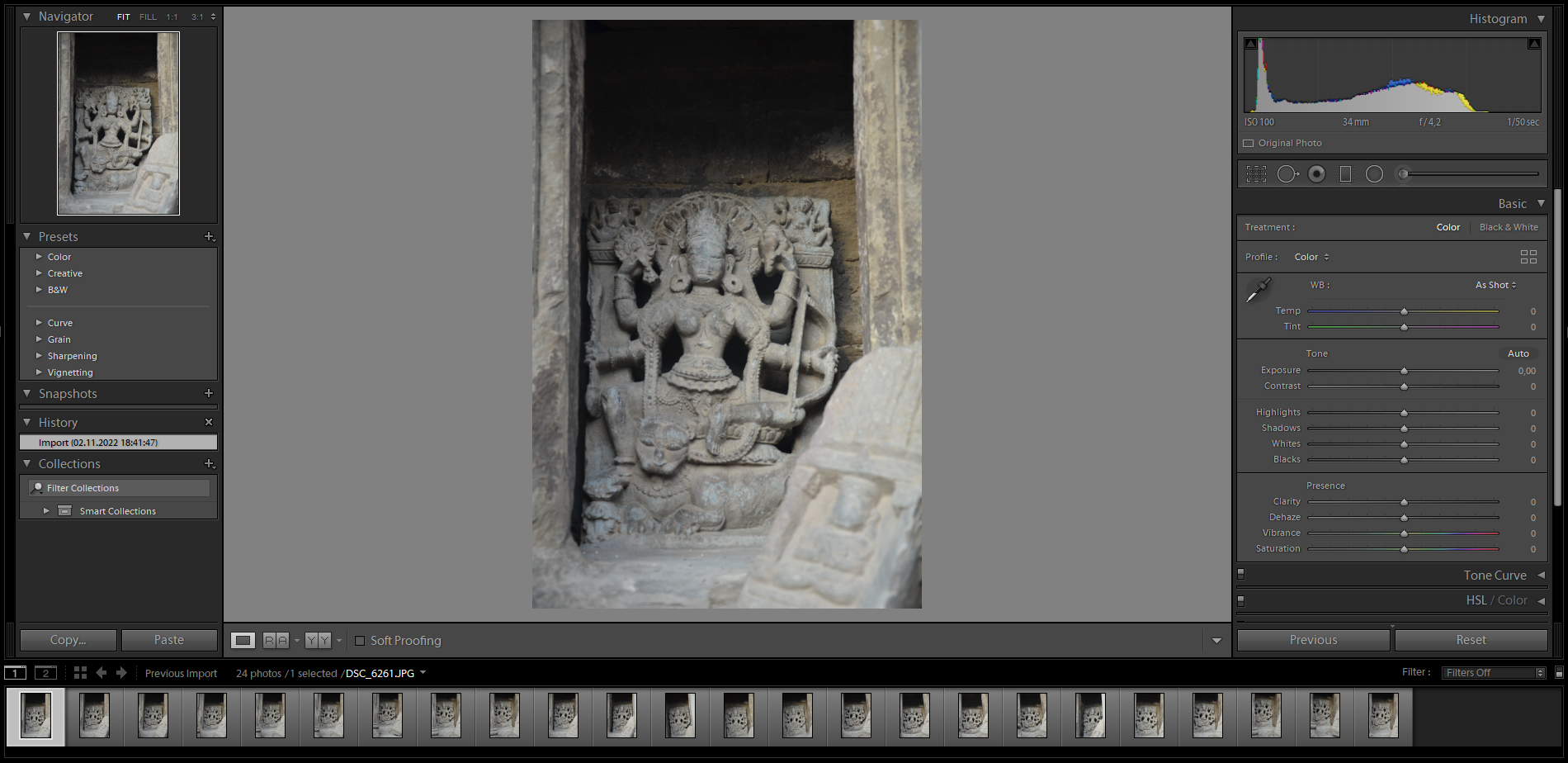

The Durga stele was photographed from as many different angles as possible, making sure to leave ample overlap between the images. In addition, all images were taken on the same day within a relatively short timeframe. This ensured a more or less identical lighting situation throughout the set.

A challenge was the position of the stele. Because it is embedded in the side of a temple, it was impossible to walk around it and photograph it from every side. For the same reason, and because of the geographical situation of the temple, a certain shadow towards the top part of the stele could not be avoided, as it was impossible to bring camera lighting or anything of the kind.

Worth mentioning about this example is the relatively low number of images that were necessary for the reconstruction. While we usually try to work with at least 50 to 100 photographs, or even more depending on the complexity of the object or structure, here we achieved remarkable results with a set of just 24 images.

It is also important to remember that taking some extra images that include a scale or benchmark can be helpful, as these can later be used to scale the finished model to the right size.
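The scaling mentioned here comes down to a single ratio: the known real-world length of the benchmark divided by its length in the unscaled model. The following is only a minimal sketch of that arithmetic, not the routine used by any particular photogrammetry package. The function names, the coordinates and the 0.5 m scale bar are hypothetical values chosen for illustration.

```python
import math


def scale_factor(point_a, point_b, real_length_m):
    """Return the factor that converts model units into metres.

    point_a and point_b are the two ends of the scale bar, measured
    in the unscaled model's own coordinate system.
    """
    model_length = math.dist(point_a, point_b)
    if model_length == 0:
        raise ValueError("scale-bar end points coincide")
    return real_length_m / model_length


def scale_vertices(vertices, factor):
    """Multiply every vertex coordinate by the given factor."""
    return [tuple(coord * factor for coord in vertex) for vertex in vertices]


# Hypothetical example: a 0.5 m scale bar that is 2.5 units long in the model.
bar_start = (0.0, 0.0, 0.0)
bar_end = (0.0, 2.5, 0.0)
factor = scale_factor(bar_start, bar_end, 0.5)

# Any vertex of the mesh can now be brought to real-world size.
scaled = scale_vertices([(1.0, 2.0, 3.0)], factor)
```

Using more than one benchmark and averaging the resulting factors would make the scaling less sensitive to a single imprecise measurement.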

Step 2 – Pre-processing and filtering:

To reduce the harsh shadows a bit and also to balance the contrast of the images, they were screened, edited and pre-processed to prepare them for import into the photogrammetry software.

During this step, it is possible to lessen shadows, brighten the image, reduce or increase contrast and try to achieve an optimal lighting situation within the processed photograph.
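To give an idea of what such adjustments do to the pixel values, here is a minimal sketch of two common operations on 8-bit grey values: a gamma curve that lifts the shadows, and a linear contrast stretch. This is an illustration only and not the editing tool or the settings used for the images on this blog. The function names, the gamma value and the sample pixels are assumptions.

```python
def lift_shadows(value, gamma=1.5):
    """Brighten an 8-bit value with a gamma curve.

    A gamma above 1 raises dark tones the most and leaves
    pure black (0) and pure white (255) unchanged.
    """
    normalised = value / 255.0
    return round(255.0 * normalised ** (1.0 / gamma))


def stretch_contrast(values):
    """Linearly spread the values so they span the full 0..255 range."""
    lowest, highest = min(values), max(values)
    if highest == lowest:
        return list(values)  # a flat image has no contrast to stretch
    return [round(255.0 * (v - lowest) / (highest - lowest)) for v in values]


# Hypothetical row of grey values from a dark photograph.
pixels = [0, 32, 64, 128, 255]
lifted = [lift_shadows(v) for v in pixels]
stretched = stretch_contrast([50, 100, 150])
```

Both operations only redistribute information that is already in the image, which is why they cannot recover detail that was never captured.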

The possibilities of this step, however, are quite limited. It is therefore advisable to make sure that the shooting conditions are as close to optimal as possible before taking the images in the first place, as there may be deficits that this process is unable to resolve.

Step 3 – Photogrammetry:

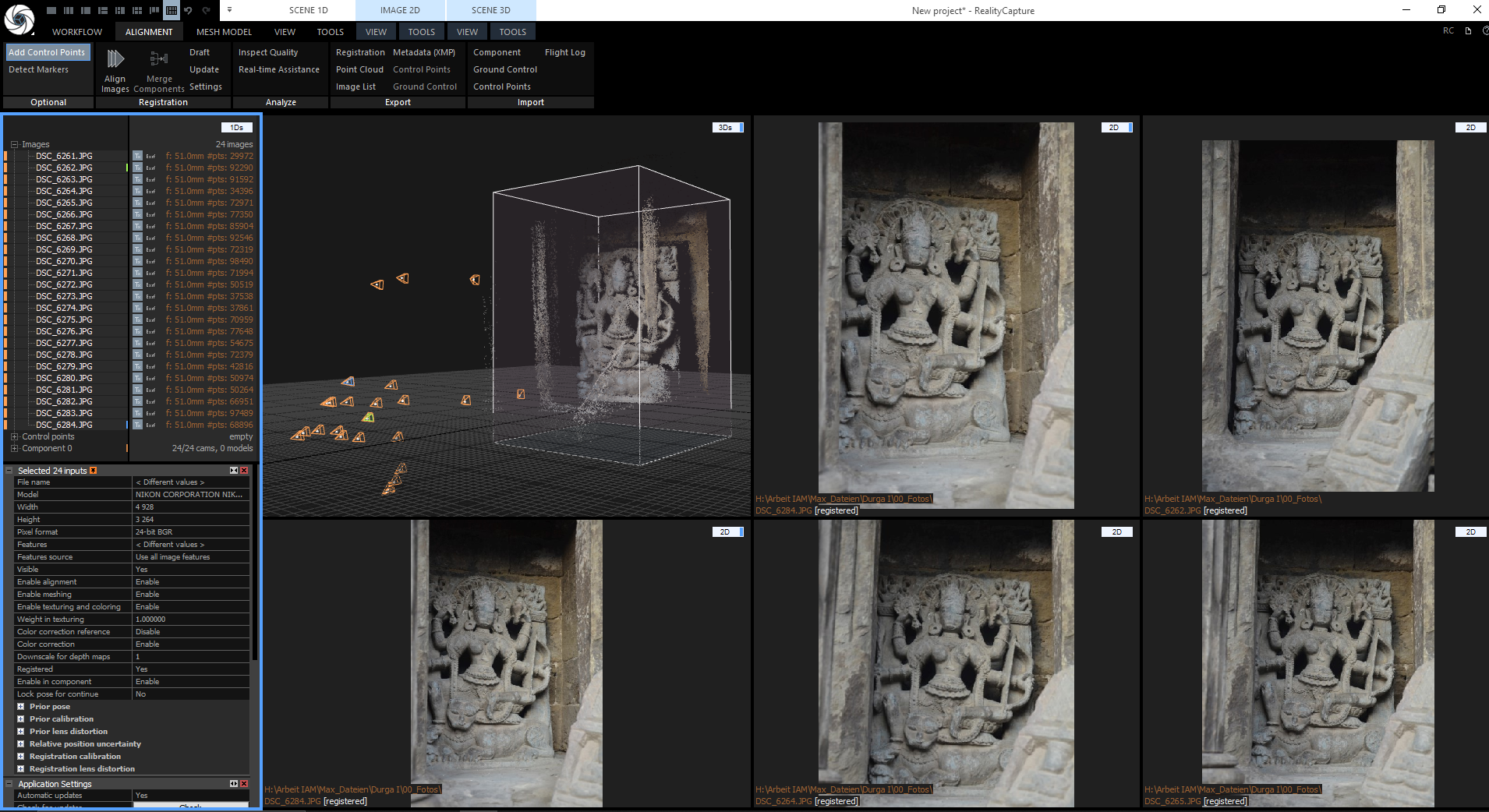

The image set is imported into the chosen photogrammetry software. Our programs of choice are Agisoft’s Metashape and CapturingReality’s Reality Capture.

The software, if used correctly, takes care of all the necessary calculations such as aligning the images correctly within 3D space, building a dense point cloud, creating a poly-mesh from said cloud as well as colouring and texturing the mesh.

As such, it could be assumed that this is the simplest step in our workflow. In reality, it is the most challenging and critical one, as it decides whether or not an image set is usable for photogrammetry, and there are many things that could go wrong.

For example, if the overlap between the images is too small, the software usually will not find enough common reference points and, as a result, will not recognise the images as part of the same object or structure. This, in turn, leads to the object either being split into multiple parts or even intersecting with itself in impossible ways. While there are ways to correct such mistakes made by the software or the photographer by providing additional input, such as manually set reference points within the images, such a process is usually very tedious and time-consuming and often still does not lead to the desired results.

As such, when in doubt, it is advisable to take more images of an object with a bigger overlap rather than taking too few and risking that the calculations fail.
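How strongly the overlap drives the number of images can be estimated with simple geometry. The sketch below assumes a deliberately simplified situation that is not taken from our shoots: a single row of photographs of a flat facade, all taken square-on from the same distance. The function name and all numbers are hypothetical.

```python
import math


def images_for_facade(width_m, distance_m, hfov_deg, overlap):
    """Estimate how many photos cover a flat facade in a single row.

    overlap is the fraction of each image shared with its neighbour,
    for example 0.6 for 60 percent.
    """
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be at least 0 and below 1")
    # Width of the facade that one photo covers at this distance.
    footprint = 2.0 * distance_m * math.tan(math.radians(hfov_deg) / 2.0)
    if footprint >= width_m:
        return 1
    # Sideways camera movement between two neighbouring photos.
    step = footprint * (1.0 - overlap)
    return math.ceil((width_m - footprint) / step) + 1


# Hypothetical example: a 10 m wide facade, shot from 3 m with a 60 degree lens.
print(images_for_facade(10.0, 3.0, 60.0, 0.6))  # 6
print(images_for_facade(10.0, 3.0, 60.0, 0.8))  # 11
```

In this example, raising the overlap from 60 to 80 percent almost doubles the number of photographs, which is a small price compared with a failed alignment.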

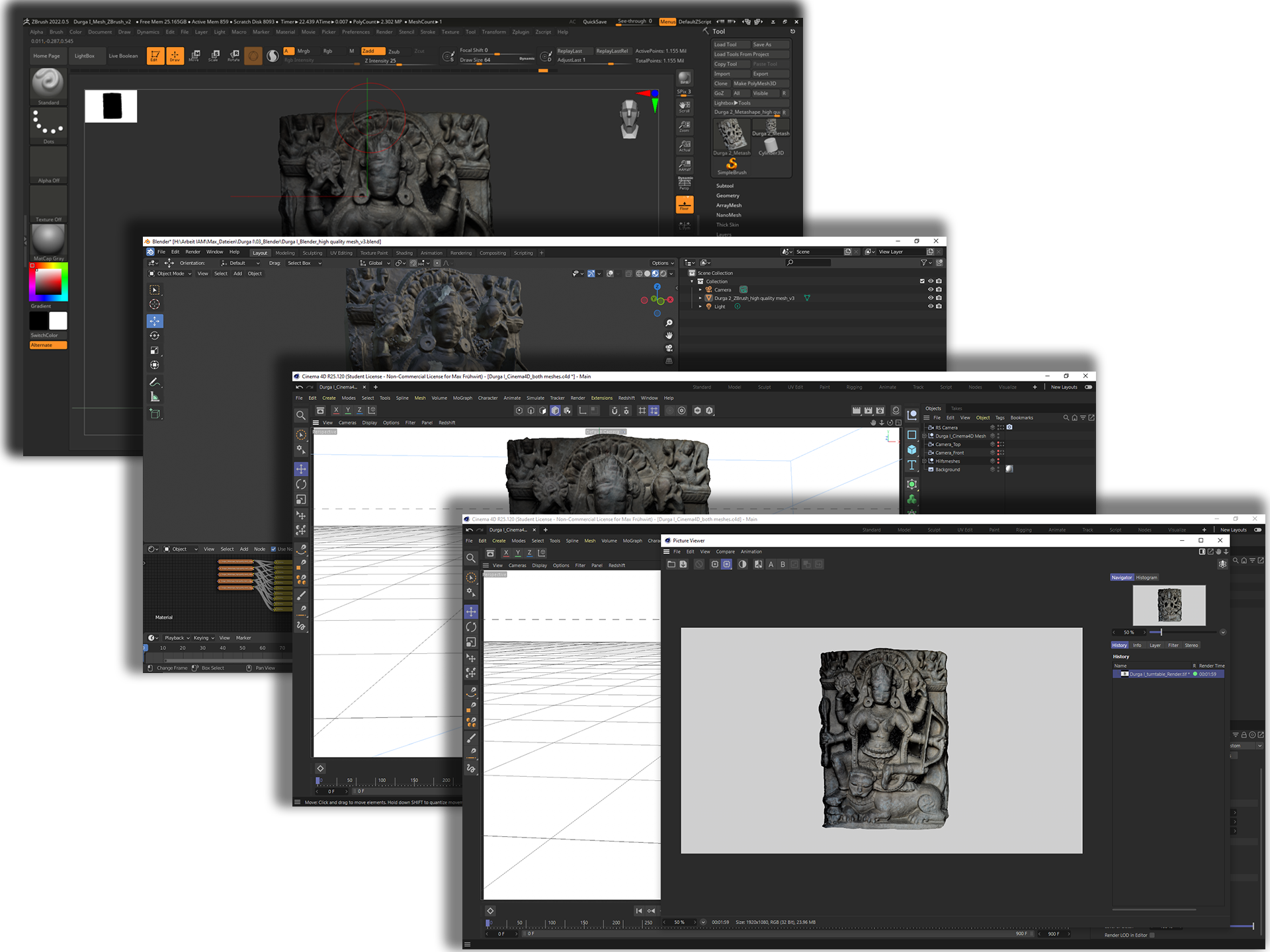

Step 4 – Post-processing:

The resulting poly-mesh often still includes quite a lot of unnecessary and unclean information as well as possible fragmentation of the model. During this step, using a range of different 3D modelling and rendering software, the mesh is cropped, cleaned and prepared for presentation.

Any gaps in the mesh or its texturing are then patched up and completed. Usually it is possible to seamlessly integrate this patchwork into the existing mesh using the original photographs.

The same would, of course, also be possible for any necessary reconstructions. We generally refrain from doing so, however, as we prefer to show the objects and structures as they exist at the time of documentation, and we clearly mark any additions or reconstructions on our end as such.

Finally, once the model has been cleaned and prepared accordingly, it gets rendered into finished images and videos, which will then be used for further presentation and other purposes. At this stage, it is also possible to export the model for other applications, such as AR or VR systems. For this blog, we have decided against doing so, as the usually quite large model does not really lend itself to this type of presentation without being further optimised and resized, which would result in a considerable increase of production time or the loss of details and quality.

Step 5 – Presentation:

Rendered images of the scaled 1:1 model as well as measurements taken from the digital environment can then be used to create line drawings, floor plans and elevations, which, due to the high level of detail present in the model, are quite close approximations to the genuine article.

Furthermore, other means of presentation include, but are not limited to, animations and short films, in which additional data can be overlaid and integrated into the digital object.

Step 6 – Other possible applications:

As mentioned previously, the resulting model can also be prepared for AR (Augmented reality) or VR (Virtual reality) based applications and thus once more integrated into a completely new built environment while retaining its original scale and dimensions.

For a different stele on this blog, we have prepared an AR-based application as a proof of concept, using 3D CAD software and mobile devices (in this particular case, our smartphones) to place the stele on the rooftop terrace of our institute. This allowed us to experience and study the object in its natural scale and proportions even without the original, and across the great geographic distance between our institute in Graz and the Indian Himalayas.

Example 2 – Temple:

Step 1 – Photographic documentation:

The unique surroundings of the Lakṣmī Damodara Temple in Chamba town, nestled between other temples, made proper photographic documentation for photogrammetry very challenging. The surrounding temples and buildings made complete documentation almost impossible, as there would always be certain angles that could not be shot due to obstructions. In addition, the similar colour palette and motifs of the surroundings meant that the software used to place the pictures would confuse some of the facades.

Nonetheless, a meticulously shot image set was created, which tried to capture as many details and provide as much overlap between the photographs as possible, to ensure a very dense documentation. The end result of these efforts was a dataset numbering around 600 images, some of which also featured a ruler or tape measure to give a sense of scale, which would later prove useful in scaling the digital copy of the temple.

Step 2 – Pre-processing and filtering:

The provided image set was first screened to exclude any images that might complicate the calculations needlessly and, in a next step, prepared for input into the photogrammetry software. It was once more necessary to reduce the harsh shadows while also balancing the contrast of the images.

The example image provided shows that, after pre-processing, the image has become overall brighter, with more vibrant colours, which may help during the later steps of production.

Step 3 – Photogrammetry:

After importing the chosen image set into our photogrammetry pipeline, it quickly became apparent that the less-than-ideal local shooting situation caused some fracturing during the alignment of the images.

As such, we ended up with multiple different components, each only displaying part of the original temple. These fragments, sometimes consisting of as few as 12 images at a time, not only lacked the necessary connection with each other, but were also sometimes quite distorted and thus unusable in their current form.
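Why an image set falls apart into separate components can be pictured as a graph problem: images are nodes, and two images are linked when they share enough common reference points. The following is a minimal sketch of that idea using a union-find structure. It is not the algorithm used internally by Metashape or Reality Capture, and the image names, match counts and the threshold of 50 matches are hypothetical.

```python
def alignment_components(image_ids, matches, min_matches=50):
    """Group images into components linked by sufficiently strong matches.

    matches maps a pair of image ids to the number of shared
    reference points found between the two images.
    """
    parent = {image: image for image in image_ids}

    def find(image):
        # Follow parent links to the group's root, shortening the path as we go.
        while parent[image] != image:
            parent[image] = parent[parent[image]]
            image = parent[image]
        return image

    for (first, second), count in matches.items():
        if count >= min_matches:
            parent[find(first)] = find(second)

    groups = {}
    for image in image_ids:
        groups.setdefault(find(image), []).append(image)
    # Largest component first.
    return sorted(groups.values(), key=len, reverse=True)


# Hypothetical example: the link between "c" and "d" is too weak to count.
images = ["a", "b", "c", "d", "e"]
matches = {("a", "b"): 120, ("b", "c"): 80, ("c", "d"): 10, ("d", "e"): 200}
components = alignment_components(images, matches)
print(components)  # [['a', 'b', 'c'], ['d', 'e']]
```

In this picture, a single weak link between two groups of images is enough to split the model, which matches what we observed with the temple.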

To correct these errors and obtain a complete model, the alignment of the images had to be refined. By finding certain distinct reference points within the images and manually informing the software about them, it is possible to teach the programme where there might be additional overlap. For some images, as many as five of those points were placed to ease the software’s alignment process.

The provided image shows how such points are then interpreted by the software and placed within the digital space to refine the model. In total, the software was able to recognise 17 points placed around our biggest component, and by comparing these points with their placement in other components, it was able to merge multiple parts into one new whole.

However, it is important to note that this is neither a very efficient nor a desirable process. Placing all 17 points within at least a few hundred images is not only very tedious and time-consuming, it is also prone to error and may in some cases still fail to produce a complete model. We were thus very lucky that it worked as well as it did this time, and even so, some information is still missing from one of the temple’s sides.

Step 4 – Post-processing and reconstruction:

After exporting the created model, it quickly became apparent that some parts of it needed further refinement. Due to a lack of imagery of the temple’s roof, the software was unable to reconstruct it completely, which is why the top part had to be cut away and remodelled manually. During this process, images of the roofscape of the temple district of Chamba were used as a reference.

Since such a reconstruction is never completely accurate or perfect, it was decided to show the reconstructed parts distinctly. For this reason, the rooftop as well as any other holes within the mesh were filled with the same grey material.

All in all, while there is a remarkable level of detail present in most of the model, the challenging, very constricted shooting situation caused some details towards the upper back of the temple to be lost, as there simply was no way to document that particular part as extensively as the rest of the object. Drone shots were unavailable and even prohibited in the temple’s surroundings. We nevertheless tried to make the best of this difficult situation, and the result is more than noteworthy.

Step 5 – Structural analysis:

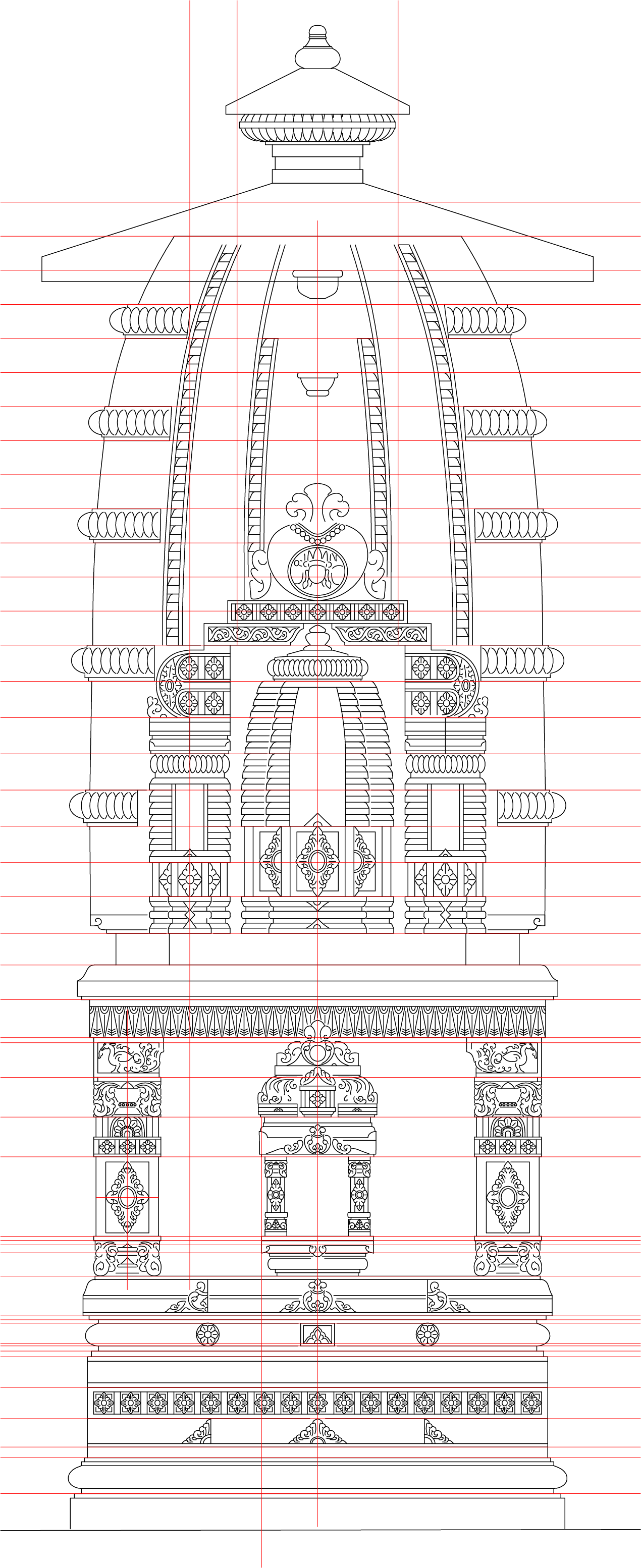

The rendered side view of the 1:1 scaled model was then used for structural analysis.

What is remarkable here is that the model provides something that is unattainable through simple photography: an orthogonal elevation of the temple in its entirety. Due to the aforementioned cramped surroundings of the temple, this could never be achieved by photographic documentation alone. As such, the images, processed together into a model, provide more information than any single one of them could provide on its own.

The orthogonal view was imported into state-of-the-art CAD software, where it was examined and studied extensively to create something that would not only be a simple traced line drawing, but would also capture all of the proportions and ratios present within the temple. For example, having recognised that the temple tilts slightly towards the front, we were also able to rectify this tilt in the drawing and provide an accurate projected elevation.
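One simple way to picture such a rectification is as a rotation of the side elevation about a point at the base, until the axis of the building stands vertical. The sketch below makes exactly that assumption, namely that the tilt behaves like a rigid rotation in the plane of the drawing. It is not a description of how the drawing was actually corrected in the CAD software, and the function names and coordinates are hypothetical.

```python
import math


def tilt_angle(base, apex):
    """Angle in radians between the vertical and the base-to-apex axis.

    Points are (x, z) pairs in the plane of the side elevation,
    with z pointing upwards.
    """
    return math.atan2(apex[0] - base[0], apex[1] - base[1])


def rectify(points, base, angle):
    """Rotate (x, z) points about base so that the tilted axis becomes vertical."""
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    rectified = []
    for x, z in points:
        dx, dz = x - base[0], z - base[1]
        rectified.append((base[0] + dx * cos_a - dz * sin_a,
                          base[1] + dx * sin_a + dz * cos_a))
    return rectified


# Hypothetical example: the apex leans 0.3 m forward over a height of 8 m.
base = (0.0, 0.0)
apex = (0.3, 8.0)
angle = tilt_angle(base, apex)
upright_apex = rectify([apex], base, angle)[0]
```

After the rotation the apex sits directly above the base point, while its distance from the base stays the same.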

Step 6 – Elevation:

All of the previous steps led to the creation of an elevation drawing that shows a remarkable level of accuracy, bringing out details that may have been hard to see in the previous pictures while also functioning as a very easy-to-read representation of the analysed structure.

Example 3 – Kharura Temple 3:

The production of a plan based on an ortho-rendering of a photo-generated 3D model is the ideal case. The production of a 3D model is, however, a multi-step process, and each step comes with specific challenges. These challenges are commonly rooted in how completely the first step, the photographic documentation, was accomplished. Conditions on the ground are often far from ideal, and photographic documentation often remains incomplete for various reasons. In such cases, the elevation drawing has to be produced in a more traditional way.

Step 1 – Sketching:

One such case is Temple 3, the “temple that shelters bees” at Kharura Mohalla in Chamba Town. This entry summarises the drawing process. The basis of the elevation is, of course, the sketch and the measurements noted in it. In addition, a number of ortho-photographs of various parts and members of the temple were taken.

Step 2 – Taking of ortho-photographs:

Some families in the neighbourhood allowed access to their flats on the first and second floors, which made it possible to take ortho-images of the śikhara tower and the amālaka as well. Wherever possible, photographs were taken with a metre scale in the frame.

Step 3 – Manual reconstruction:

Since the temple is quite tilted and leans towards the northern side, several measurements, especially in the upper part, had to be interpolated.

Step 4 – Final result:

Such a process never results in an exact depiction of what the monument actually looks like today. It is, however, a reproduction of the idealised shape which, in this case, is within a tolerable range of less than +/- 10 cm from ground level to the lower canopy. If reproduced in print media, such an inaccuracy is practically irrelevant.
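A quick calculation shows how small this deviation becomes on paper. The print scales in the sketch below are hypothetical examples and not scales stated for any particular publication. The only value taken from above is the tolerance of 10 cm.

```python
def error_on_paper_mm(error_m, scale_denominator):
    """Convert a real-world error in metres into millimetres on a print at 1:N."""
    return error_m * 1000.0 / scale_denominator


# The 10 cm tolerance from above at three hypothetical print scales.
for denominator in (50, 100, 200):
    on_paper = error_on_paper_mm(0.10, denominator)
    print(f"1:{denominator} -> {on_paper:.1f} mm")
# 1:50 -> 2.0 mm
# 1:100 -> 1.0 mm
# 1:200 -> 0.5 mm
```

At the smaller scales, the deviation approaches the width of a drawn line.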